LeNet: Gradient-Based Learning Applied to Document Recognition

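One of the first convolutional neural networks used for image classification. The network is fairly simple: 2 convolutional layers, 2 pooling layers, and 3 fully connected layers.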

What is LeNet?


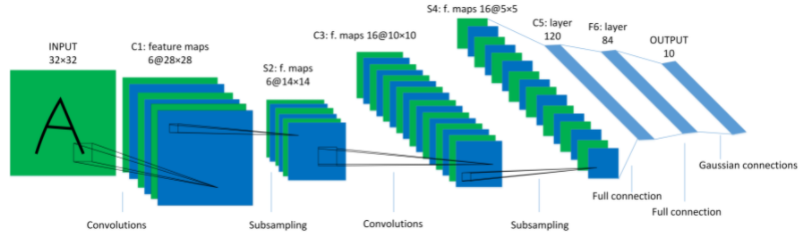

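- LeNet-5 is a convolutional neural network (CNN) proposed by Yann LeCun et al. for recognizing handwritten digits and machine-printed characters. Its name comes from the author, LeCun, and the 5 is the code number of this research result. LeNet-5 was preceded by the lesser-known LeNet-4 and LeNet-1.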

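- LeNet-5 showed that the correlations between pixel features in an image can be extracted by convolution operations with shared parameters. The combination convolution -> subsampling (pooling) -> nonlinear mapping is the basis of most of today's popular deep image recognition networks.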

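- Drawbacks: (1) the design is fairly simple, so its ability to handle complex data is limited; (2) the fully connected layers are computationally expensive, and later networks replace them with convolutional layers.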
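The convolution -> subsampling -> nonlinear mapping combination described above can be sketched in plain Python. This is only a toy illustration under stated assumptions: the 5x5 image and the 2x2 kernel are made-up values, pooling is 2x2 max pooling (as in the PyTorch definition later in these notes), and the nonlinearity is ReLU. The helper names are hypothetical.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool_2x2(fmap):
    """Downsample by taking the maximum of each non-overlapping 2x2 block."""
    return [
        [
            max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
            for j in range(0, len(fmap[0]) - 1, 2)
        ]
        for i in range(0, len(fmap) - 1, 2)
    ]


def relu(fmap):
    """Element-wise nonlinearity: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in fmap]


# Hypothetical input: a 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical kernel that responds to dark-to-bright transitions (left to right).
kernel = [[-1, 1], [-1, 1]]

# The same 4 kernel weights are reused at every image position (parameter sharing).
features = relu(max_pool_2x2(conv2d_valid(image, kernel)))
print(features)
```

The same four kernel weights are applied at every position, which is why the number of parameters does not grow with the image size.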

What is the network structure of LeNet?


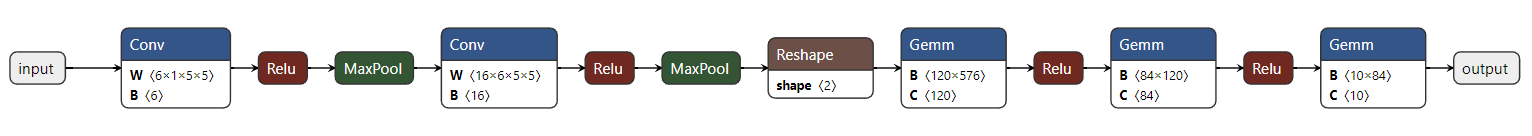

LeNet-5 consists of 2 convolutional layers, 2 subsampling layers, and 3 fully connected layers.
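To get a feel for where the parameters of this structure live, the tally below counts weights and biases per layer. It is a rough sketch under assumptions: the layer sizes (6 and 16 filters of size 5x5, then 120, 84, and 10 units) are taken from the PyTorch definition later in these notes, the flattened feature size is assumed to be 16*5*5 = 400, and the subsampling layers are treated as parameter-free max pooling. The function names are hypothetical.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per output channel of a square-kernel convolution."""
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


def fc_params(in_features, out_features):
    """Weights plus one bias per output unit of a fully connected layer."""
    return out_features * (in_features + 1)


def lenet_param_counts(flat_features):
    """Per-layer parameter counts; pooling layers contribute nothing here."""
    return {
        "conv1": conv_params(1, 6, 5),
        "conv2": conv_params(6, 16, 5),
        "fc1": fc_params(flat_features, 120),
        "fc2": fc_params(120, 84),
        "fc3": fc_params(84, 10),
    }


counts = lenet_param_counts(flat_features=16 * 5 * 5)  # assumed 400 flattened features
total = sum(counts.values())
fc_share = (counts["fc1"] + counts["fc2"] + counts["fc3"]) / total

for name, n in counts.items():
    print(f"{name}: {n}")
print(f"total: {total}, fully connected share: {fc_share:.1%}")
```

Under these assumptions the three fully connected layers hold the large majority of the parameters, which fits the drawback noted earlier about their cost.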

How do you define the LeNet classification model in PyTorch?

```python
import torch


# Defining the network (LeNet-5)
class LeNet5(torch.nn.Module):
    def __init__(self):
        super().__init__()
        # Convolution. With kernel_size=5 and padding=2 the spatial size is preserved,
        # so a 32x32 input stays 32x32 (and a 28x28 input stays 28x28).
        self.conv1 = torch.nn.Conv2d(in_channels=1, out_channels=6, kernel_size=5, stride=1, padding=2, bias=True)
        # Max-pooling
        self.max_pool_1 = torch.nn.MaxPool2d(kernel_size=2)
        # Convolution
        self.conv2 = torch.nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5, stride=1, padding=0, bias=True)
        # Max-pooling
        self.max_pool_2 = torch.nn.MaxPool2d(kernel_size=2)
        # Fully connected layers
        # self.fc1 = torch.nn.Linear(16 * 5 * 5, 120)  # use this for 28x28 inputs
        self.fc1 = torch.nn.Linear(576, 120)  # 32x32 inputs: 16 * 6 * 6 (= 576) features -> 120 features
        self.fc2 = torch.nn.Linear(120, 84)  # 120 features -> 84 features
        self.fc3 = torch.nn.Linear(84, 10)  # 84 features -> 10 class scores

    def forward(self, x):
        # convolve, then perform ReLU non-linearity
        x = torch.nn.functional.relu(self.conv1(x))
        # max-pooling with 2x2 grid
        x = self.max_pool_1(x)
        # convolve, then perform ReLU non-linearity
        x = torch.nn.functional.relu(self.conv2(x))
        # max-pooling with 2x2 grid
        x = self.max_pool_2(x)
        # flatten the output of max_pool_2 to 16 * 6 * 6 (= 576) columns
        # read through https://stackoverflow.com/a/42482819/7551231
        # x = x.view(-1, 16 * 5 * 5)  # use this for 28x28 inputs
        x = x.view(-1, 576)
        # FC-1, then perform ReLU non-linearity
        x = torch.nn.functional.relu(self.fc1(x))
        # FC-2, then perform ReLU non-linearity
        x = torch.nn.functional.relu(self.fc2(x))
        # FC-3 (raw class scores, no activation)
        x = self.fc3(x)
        return x
```
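The two flattened sizes that appear in the code, 576 and 16*5*5 = 400, follow from the layer settings. The sketch below traces the spatial size through the network using the standard output-size formula for convolutions and assuming that 2x2 pooling halves the size (rounding down). The function names are hypothetical and the code does not depend on PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2):
    """Output width/height of non-overlapping pooling (stride equal to kernel size)."""
    return size // kernel


def flattened_features(input_size):
    """Number of features entering fc1 for a square, single-channel input."""
    s = conv_out(input_size, kernel=5, padding=2)  # conv1: size preserved
    s = pool_out(s)                                # max_pool_1: halved
    s = conv_out(s, kernel=5)                      # conv2: shrinks by 4
    s = pool_out(s)                                # max_pool_2: halved
    return 16 * s * s                              # 16 output channels from conv2


print(flattened_features(32))  # 32 -> 32 -> 16 -> 12 -> 6, so 16 * 6 * 6
print(flattened_features(28))  # 28 -> 28 -> 14 -> 10 -> 5, so 16 * 5 * 5
```

So with `padding=2` on the first convolution, `Linear(576, 120)` matches 32x32 inputs, while the commented-out `Linear(16*5*5, 120)` matches 28x28 inputs.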

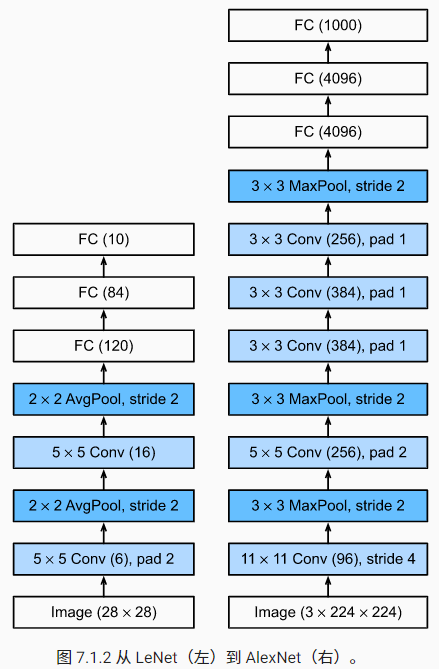

How do LeNet and AlexNet differ in network structure?


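- LeNet-5: 2 convolutional layers, 2 subsampling layers, and 3 fully connected layers

- AlexNet: 5 convolutional layers, 2 fully connected hidden layers, and 1 fully connected output layer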